It has been a while since I have seen a new solution or tool rolled out without the buzzword “Artificial Intelligence” attached to it. AI is not new; it has been around for over 60 years. Yet every tech company now wants to label itself an AI company or partner with those already doing well in this next phase of intelligent computing. I'm not sure whether they genuinely want to embrace what AI has to offer or are simply seeking attention to captivate their audience, but there has been a mad rush, and everyone seems eager to jump on the bandwagon.

I’m no exception, so here’s my blog on AI, and you are already reading it. Thanks to AI!

Demystifying AI – It’s not just a Buzzword!

Firstly, we can all agree that recent advancements have made the technology openly available to everyone at a reasonable cost or even free of charge.

Another reason is the sheer volume of data now available to us. People want to apply technology to that data to draw meaningful insights and make decisions. When someone refers to AI today, they are most likely referring to Natural Language Processing (NLP), Machine Learning, its subset Deep Learning, or a combination of these, used to provide one or more of the following capabilities:

- Learning – Acquisition of information and rules for using the information

- Reasoning – Using rules to reach approximate or definite conclusions

- Prediction – Using past and current information to predict the future

- Self-correction or Optimization – Learning from outcomes to correct and refine information

AI in Software Testing

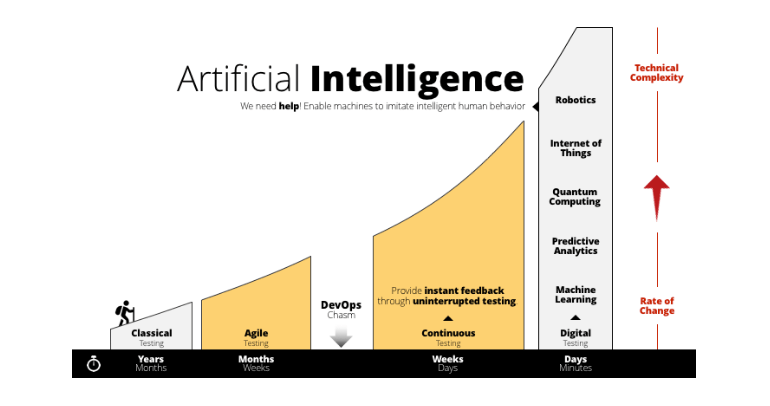

In the last decade or so, software testing has evolved more than ever. Test automation, in particular, has changed from a specialized skillset into a basic necessity for every tester. Outside a few niche industries, manual testing as a trade is already obsolete. While exploratory testing remains key for every application and system under test, very few companies hire dedicated manual testers for it. Instead, they rely on business analysts with extensive product knowledge or on automation engineers to perform exploratory testing. In fact, the testing community is currently open to adopting new technology even before development teams get their hands on it. This is evident from the many test automation tools on the market that have introduced some kind of artificial intelligence into their products.

Currently, AI is used in testing tools at every stage, from creating tests to running them and analyzing their results. Common themes include codeless automation, automated test case creation, improved test capability, shifting testing left, reduced time to test, predictive analytics, automated bug tracking, continuous testing, and tighter integration with code. Some of them are listed below.

Create Tests:

- While automated test tools such as Selenium IDE and UFT have long been able to record and play back user actions, tools can now intelligently create test cases and document test steps as well, reducing the manual effort involved in documenting tests. Example – Functionize.

- Minimize the maintenance of automation scripts by automatically detecting new visual changes in the application under test and either notifying the tester or automatically updating the object repository. Example – TestCraft.
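To make the self-healing idea a little more concrete, here is a minimal Python sketch. It is not how TestCraft or any other tool works internally; it assumes each UI element is modelled as a plain dictionary of attributes, and the function names and the 0.5 threshold are hypothetical. When a stored locator breaks, it falls back to the on-page element whose attributes best match the last known ones.

```python
from typing import Optional

# Hypothetical sketch: UI elements are modelled as plain attribute dictionaries.


def attribute_similarity(stored: dict[str, str], candidate: dict[str, str]) -> float:
    """Fraction of the stored attributes that the candidate still matches exactly."""
    if not stored:
        return 0.0
    matches = sum(1 for key, value in stored.items() if candidate.get(key) == value)
    return matches / len(stored)


def heal_locator(
    stored: dict[str, str],
    candidates: list[dict[str, str]],
    threshold: float = 0.5,  # hypothetical cut-off for "close enough"
) -> Optional[dict[str, str]]:
    """Return the best-matching candidate element, or None if nothing is close enough.

    A real tool would also notify the tester or write the healed locator back
    to the object repository; this sketch only returns the match.
    """
    best = max(candidates, key=lambda c: attribute_similarity(stored, c), default=None)
    if best is None or attribute_similarity(stored, best) < threshold:
        return None
    return best


# Usage: the button's id changed in a new build, but its text and class did not.
stored_button = {"id": "submit-btn", "text": "Submit", "class": "btn primary", "tag": "button"}
page_elements = [
    {"id": "cancel-btn", "text": "Cancel", "class": "btn", "tag": "button"},
    {"id": "btn-submit-v2", "text": "Submit", "class": "btn primary", "tag": "button"},
]
healed = heal_locator(stored_button, page_elements)
print(healed["id"])  # btn-submit-v2
```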

Run Tests:

- Predictive test selection algorithms can help identify and choose the tests that must be run for the current change rather than running the entire suite. The prediction could be based on a combination of application changes, past results, bug fixes, open issues, and so on.

- Improved image recognition and comparison. In contrast to a pixel-by-pixel comparison of the entire captured image, tools claim to compare and highlight only meaningful differences. Example – Applitools.
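Predictive test selection can be sketched in a few lines of Python as well. This is a hand-weighted heuristic, not a trained model, and the `CaseHistory` record, the weights, and the cut-off score are all assumptions for illustration: each test earns points if the current change touches a file it covers and for how often it has failed recently.

```python
from dataclasses import dataclass, field


@dataclass
class CaseHistory:
    """Hypothetical per-test metadata that a CI system might keep."""
    name: str
    covered_files: set[str]
    recent_results: list[bool] = field(default_factory=list)  # True = passed

    def failure_rate(self) -> float:
        """Share of recent runs that failed (0.0 when there is no history)."""
        if not self.recent_results:
            return 0.0
        return self.recent_results.count(False) / len(self.recent_results)


def score(case: CaseHistory, changed_files: set[str],
          change_weight: float = 1.0, failure_weight: float = 0.5) -> float:
    """Combine two signals: does the change touch covered code, and how often has the test failed lately?"""
    touches_change = 1.0 if case.covered_files & changed_files else 0.0
    return change_weight * touches_change + failure_weight * case.failure_rate()


def select_tests(cases: list[CaseHistory], changed_files: set[str],
                 min_score: float = 0.2) -> list[str]:
    """Return the names of tests worth running for this change, highest score first."""
    ranked = sorted(cases, key=lambda c: score(c, changed_files), reverse=True)
    return [c.name for c in ranked if score(c, changed_files) >= min_score]


# Usage: only auth.py changed in this commit.
suite = [
    CaseHistory("test_login", {"auth.py", "session.py"}, [True, True, True, True]),
    CaseHistory("test_checkout", {"cart.py", "payment.py"}, [True, False, False, True]),
    CaseHistory("test_search", {"search.py"}, [True, True, True, True]),
]
print(select_tests(suite, {"auth.py"}))  # ['test_login', 'test_checkout']
```

Because the weights are fixed by hand, nothing here learns from new runs; a tool that tuned them from historical results would be closer to the self-learning that separates AI from an advanced algorithm.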

Reporting:

- Automated bug tracking that uses predictive analytics to separate real issues from false positives, removing the painful process of combing through exceptions and error logs.

- Real-time analytics of test execution that classify and tag new and similar issues based on historical bug data. Example – reportportal.io
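Tagging a new failure as similar to a known issue can be approximated with plain string similarity from Python's standard library. This is a simplified stand-in, not how ReportPortal or any other tool actually analyzes results; the bug IDs, messages, masking rules, and the 0.8 threshold are made up for illustration.

```python
import re
from difflib import SequenceMatcher


def normalize(message: str) -> str:
    """Mask details that vary between runs (hex ids, numbers) so similar errors line up."""
    message = re.sub(r"0x[0-9a-fA-F]+", "<hex>", message)
    message = re.sub(r"\d+", "<n>", message)
    return message.lower().strip()


def triage(failure: str, known_bugs: dict[str, str],
           threshold: float = 0.8) -> tuple[str, float]:
    """Tag a failure with the closest known bug id, or "NEW" if nothing is similar enough."""
    target = normalize(failure)
    best_id, best_ratio = "NEW", 0.0
    for bug_id, message in known_bugs.items():
        # ratio() is 1.0 for identical strings and approaches 0.0 as they diverge.
        ratio = SequenceMatcher(None, target, normalize(message)).ratio()
        if ratio > best_ratio:
            best_id, best_ratio = bug_id, ratio
    if best_ratio < threshold:
        return "NEW", best_ratio
    return best_id, best_ratio


# Usage with a hypothetical history of two known bugs.
known = {
    "BUG-101": "TimeoutError: element #checkout not found after 30 seconds",
    "BUG-102": "AssertionError: expected cart total 59.99 but got 0.00",
}
print(triage("TimeoutError: element #checkout not found after 45 seconds", known))  # ('BUG-101', 1.0)
print(triage("ConnectionResetError: socket closed by peer", known)[0])  # NEW
```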

Is it really AI?

You may already be asking: test automation tools have been doing all of this for years, so why do we need AI? The simple answer is that, with so-called AI, they now do the same things better, faster, and with fewer limitations. But is it really Artificial Intelligence that these tools are using? The answer cannot be a resounding yes. To me, if there is no self-learning involved, it’s not AI but rather an advanced algorithm, and only a very small set of tools out there come close to using real AI.

Finally, I think AI has huge potential across the software testing spectrum to improve testing efficiency and effectiveness. It can take test automation to a whole new level, moving from mere assistance to full autonomy, but it has a long way to go. At the same time, it shouldn’t become a forced adoption or an unwanted distraction: many complex problems have a simple solution, and AI might not be the only option, or even the first. So, as with any technology, testers and their teams need to evaluate the problem at hand and the probable solutions, then make an informed decision on whether to use AI.


What do you think about AI in software testing and its importance? Leave your comments here or on Twitter @testingchief.